Welcome to your new American Community Survey Data platform.

For most demographers, ‘the cloud’ and ‘big data’ are nothing more than corporate buzzwords, often found in various combinations with business-speak nonsense such as ‘best practices’ and ‘leveraging core competencies’. Most of the time we’re consumed with our comfortable world of American Fact Finder searches and Microsoft Access queries, and that suits our purposes just fine. We’re all isolated in our various niche’s – but since we all essentially rely on the same data to do our jobs, there ends up being an excessive duplication of effort. Of course, it doesn’t have to be that way.

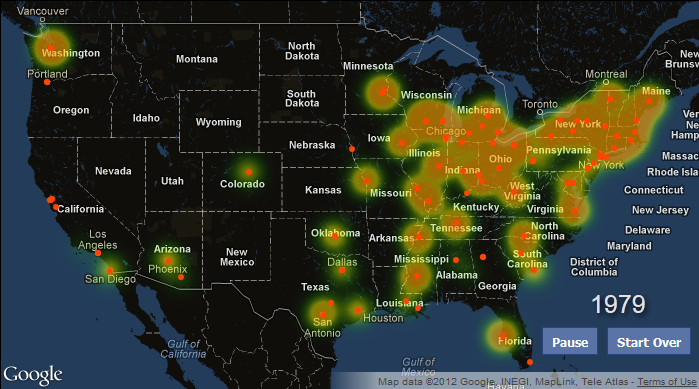

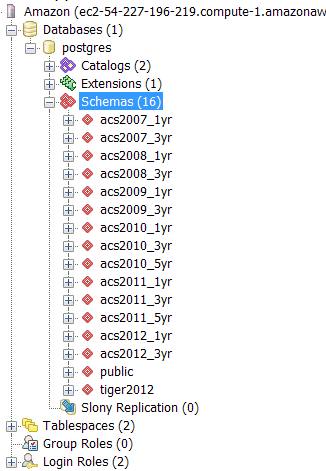

The brilliant folks at www.censusreporter.org, a census data resource for journalists, have finally succeeded in making American Community Survey data available to the masses (that’s us). They have compiled an extensive database spanning the years 2005-2012, including the most current 1-year, 3-year, and 5-year datasets. for a diagram of the data currently available.

Imagine being able to instantly pull data tables from the latest 5-year release without having to spend hours downloading and formatting an unwieldy chunk of data. That work has already been done – you may as well take advantage of it. The only thing you have to do is follow a few easy instructions to set up your server in the cloud.

Did I say easy? Kinda easy. Relatively easy. Okay, perhaps a little intimidating to those not familiar with the technology. But that’s why I’m here; to walk you through each step of the process. These are instructions adapted from Census Reporter’s blog post , that I have updated to reflect changes to Amazon’s interface. I’ve also added additional instructions so that demographers will be able to use the data with the tool they are most comfortable with; namely, Microsoft Access.

Step 1. . This is the only step I’m not going to hold your hand through. Just click the orange ‘Sign Up’ button at the top-center of the page. You’re going to end up spending some money, so you’ll probably need to put in some credit card information at some point. The good news is that you can test this whole setup for a few days and probably spend less than $5. Or you can just try this tutorial, decide it’s not for you, and likely spend less than $1.

Step 2. Now that you’ve created an account, you’re going to want to visit your . There’s a lot of crazy stuff on that page, but don’t worry. The only item that interests you is EC2. Click on it.

This will take you to the EC2 dashboard page. There’s no sense explaining too much of this right now. Let’s just dive in. Click ‘Launch Instance’ to start.

You’ve now been taken to a page where you’ll be asked to choose which Amazon Machine Image (AMI) you would like to use.

Before you do anything else, please refer to the upper-right corner of your webpage and choose N.Virginia for the geographic region. (TMI: For whatever reason, when I created this tutorial the snapshot of the census reporter database wasn’t available in some of the other regions.)

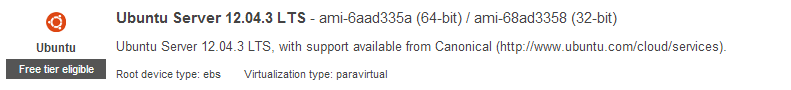

Now, back to picking out our AMI. This is somewhat like choosing what type of computer you want to run your database on in the cloud. Census Reporter recommends you choose the Ubuntu Server 12.04.3 LTS.

Make sure 64-bit is selected, and then press the ‘Select’ button.

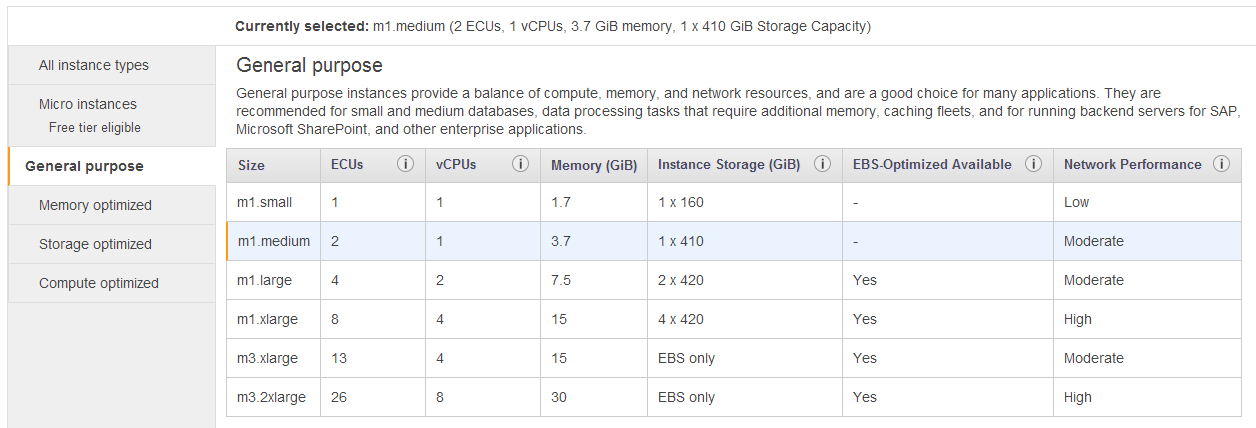

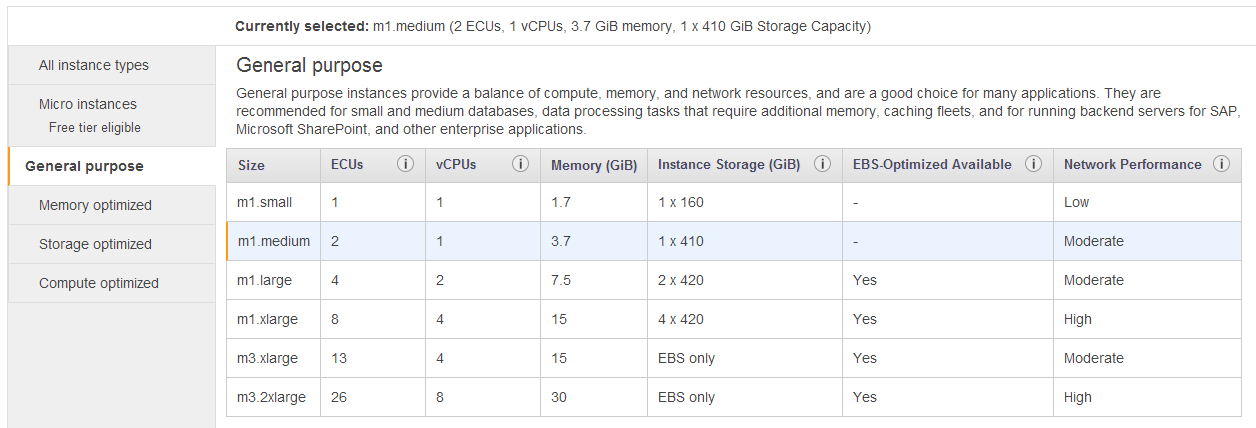

On the next page, you’ll be asked to choose an Instance Type. This is like asking how powerful you want your computer to be. I would suggest choosing one of the General Purpose instances. Choose at least the General Purpose m1.medium instance. Don’t be lured by the ‘Free Tier’ instances in hopes that you can get through this without spending just a tiny amount of money. (You can’t.)

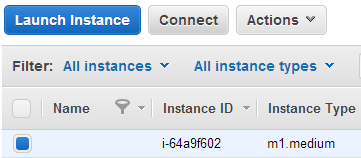

Here’s what my selection looked like:

Now click ‘Configure Instance Details’ in the lower right corner to move on to the next screen.

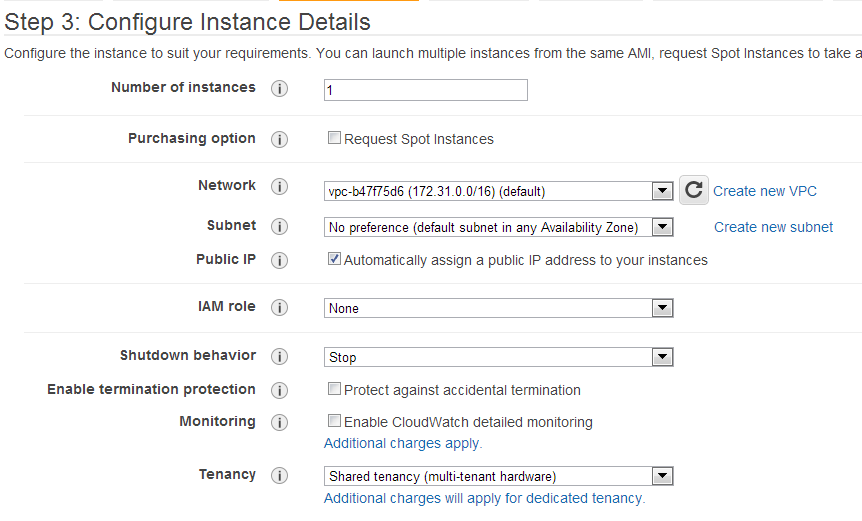

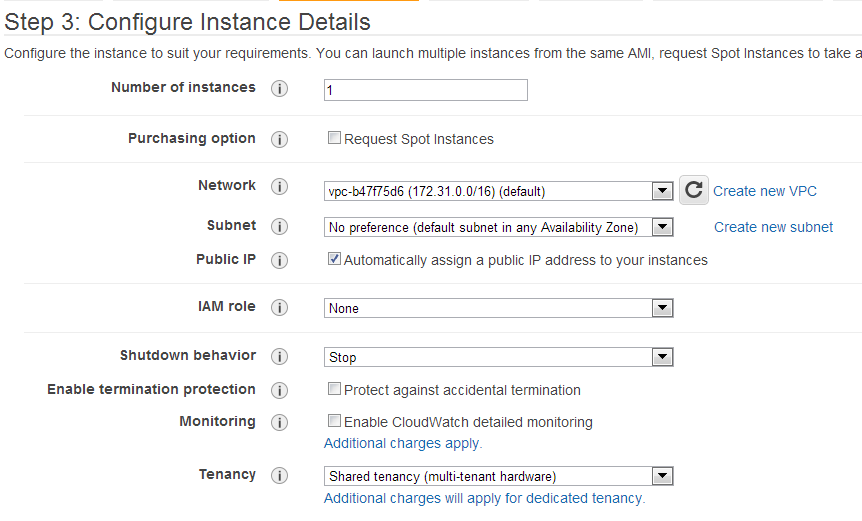

Leave everything default on the Configure Instance Details Page. Then click the ‘Next: Add Storage’ button.

Now you should be on the ‘Add Storage’ page. This one is important, so make sure you get all the details correct. Think of this section as adding your database. You’re going to be adding a copy (snapshot) of Census Reporter’s database.

Choose EBS for Type. Choose /dev/sdc for Device. Type: snap-f17240e0 into Snapshot. Type 500 into Size (GB), and click on the box that says ‘Delete on Termination’.

Then click the button on the lower right that says ‘Next: Tag Instance’.

Keep everything default on this page, then go to next page: ‘Next: Configure Security Group’.

We’ll revisit the Security Group settings in a few more minutes. For now, leave everything default. Let’s get this show on the road. Click ‘Review and Launch’ here, and then on the next page, click ‘Launch’.

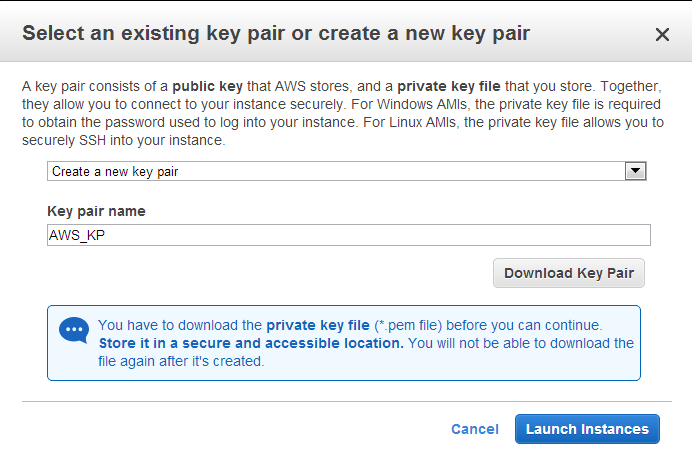

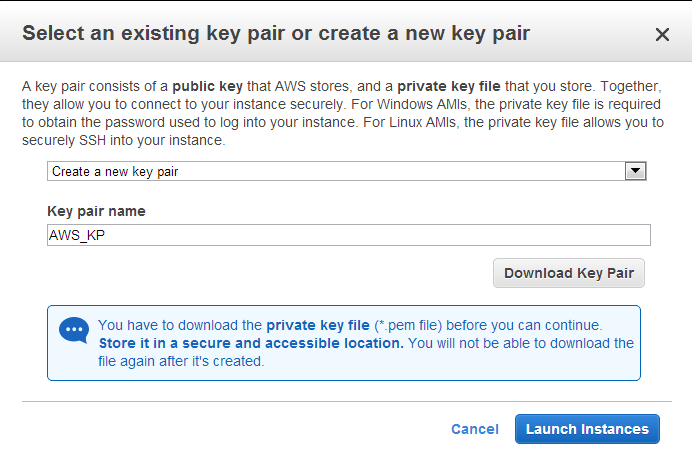

Next up you’ll get a dialog box about Key Pairs. You will need this file to be able to log in to your instance. Choose ‘Create New Key Pair’ in the first drop-down list. Name your Key Pair something hopefully not as obscure as ‘AWS_KP’ and click the ‘Download Key Pair’ button. You should probably get some sort of Save File notification. Put this file somewhere that you will remember! This is important!

Lastly, press the ‘Launch Instances’ button.

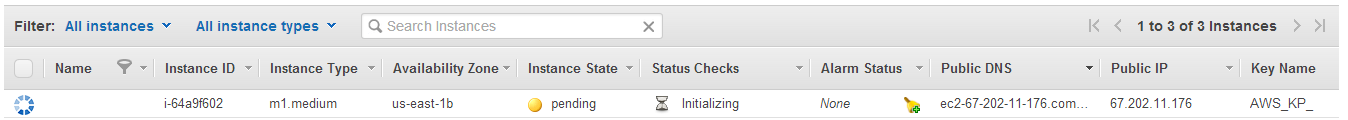

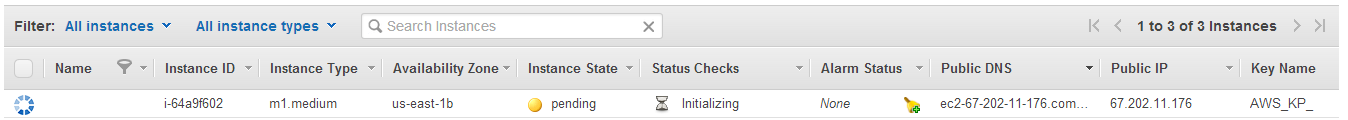

The next page will let you know that Amazon is setting up your Instance. Click the blue ‘View Instances’ button in the lower corner of the page.

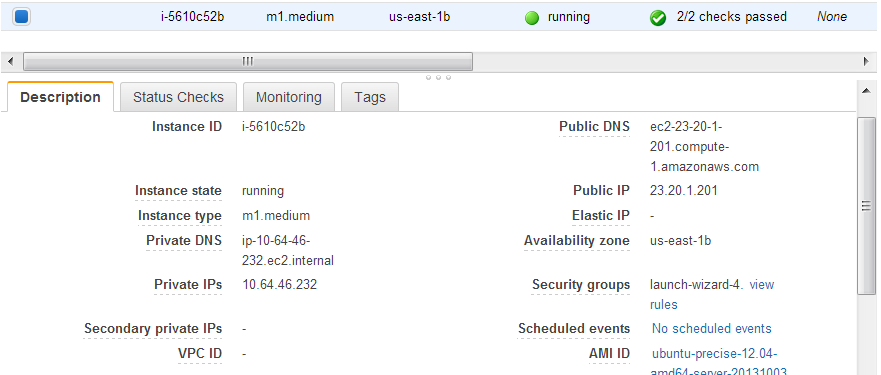

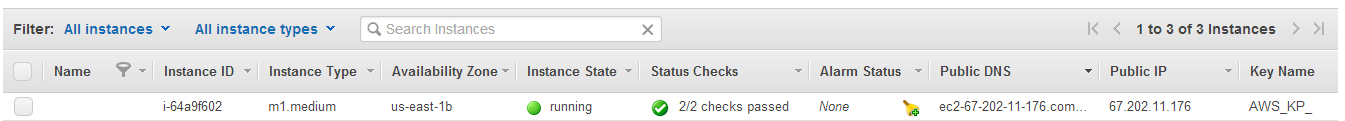

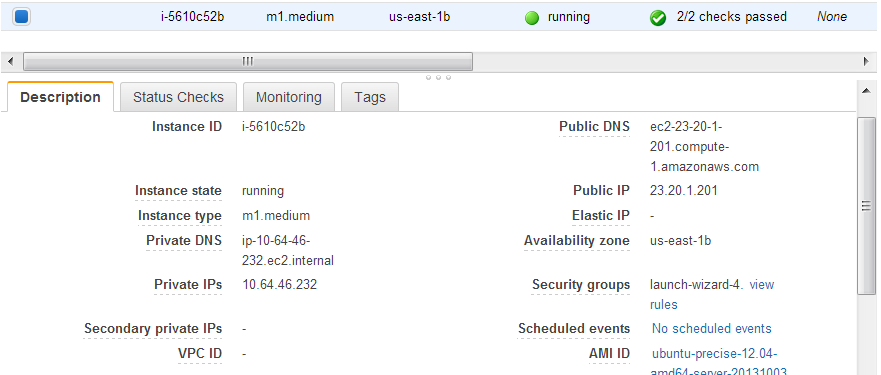

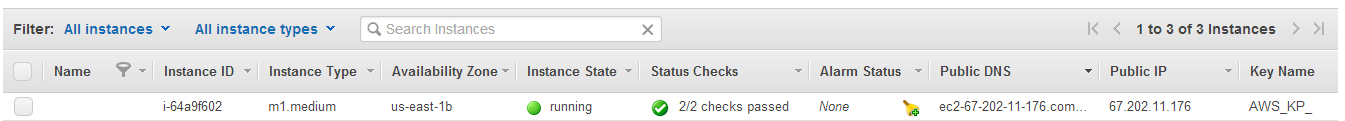

Keep an eye on this screen. Once the ‘Status Checks’ field reads ‘2/2 checks passed’, then you’re ready for Step 3. (This will take a few minutes).

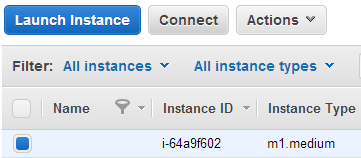

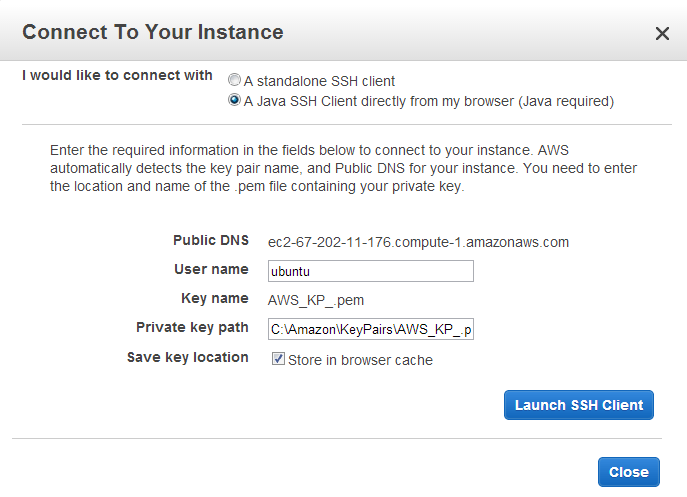

Step 3. Now that your instance has been created by Amazon, you’re going to be typing a few commands so that everything will be connected behind the scenes. Check the box next to your instance, and press the ‘Connect’ button.

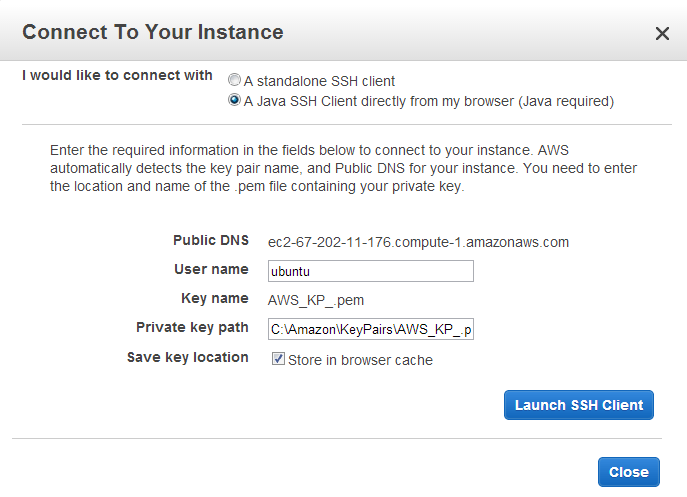

The next dialog box will ask you how you plan to connect. Change the selected option to: ‘A Java SSH Client directly from my browser’.

Find the key on your hard drive. Type in the exact path: (in my case) ‘C:AmazonKeyPairsAWS_KP_.pem’. Then press ‘Launch SSH Client’.

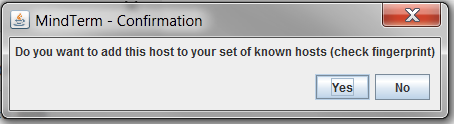

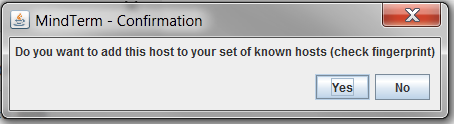

A small dialog box will pop up the first time that you connect this way, asking if you want to add this host to your set of known hosts. Click ‘Yes’.

(We can ‘Close’ our ‘Connect your Instance’ dialog box now. We won’t need it anymore.)

Even if you’re fairly techy, this may be the part where you feel as if you’re falling down the rabbit hole. Just hang in there. I’ll try to let you know what’s going on in plain English. (And where I have no clue, I’ll just give you the next instruction).

The dreaded command line dialog opens up. So where are we? Right now, we’re talking directly to our cloud computer, which is a Linux machine. (Thus we’ll have to speak in a language quite different than what we’re used to).

The dreaded command line dialog opens up. So where are we? Right now, we’re talking directly to our cloud computer, which is a Linux machine. (Thus we’ll have to speak in a language quite different than what we’re used to).

This is the first command we (very carefully) type in:

curl -fsSL https://raw.github.com/censusreporter/census-postgres-scripts/master/00_connect_ebs_volume.sh | tail -n 3 | sed 's/xvdf/xvdc/g' | sh

When that is finished, enter this (all on one line):

curl -fsSL https://raw.github.com/censusreporter/census-postgres-

scripts/c1a8cefb6e1afda7f5f706c15b7fef43d114b242/01_set_up_postgresql.sh | tail -n 9 | sudo sh

This will process for a while. (Note: this script is different from the one suggested at the Census Reporter site. For whatever reason, the script from their instructions no longer works. The one here – which does work as of 1/18/14 – is essentially a link to a previous version of their script. I’ll update this post as needed as versions change. )

Be patient and wait until you’re greeted with a ‘~$’ prompt. When that happens, we will attempt to log into the database. Type in:

psql -U census -h localhost postgres

When prompted for your password, type in: censuspassword

(Note that your keypresses are being recorded, even though nothing is shown).

So what just happened? The database (a PostgreSQL database) was installed and configured. In the last line you typed, you actually logged into the database. (You’ll know that you’re successfully logged in if the command prompt changed to ‘postgres=> ‘ ).

If you were a super advanced user, you could stop there. For the rest of us who prefer not to work at the command line, you will need to change some security settings so that you can interact with the database in a more familiar environment. The catch is that you’ll be altering the security settings of the cloud computer in a Linux environment.

We’re not actually going to be working in PostgreSQL for now, so we can type the following command to exit the database and return to the Linux command prompt:

q

Step 4. We’ll need to change some security group settings. Go back to the ‘Instances’ web page, check the box next to your Instance, and look down at the tabbed area below.

Take a look where it says ‘Security Groups’ on the right hand side. Mine says ‘launch-wizard-4′. Yours will say something different (most likely). Take note of this name.

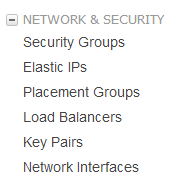

Next, on the left side of the page, locate where it says ‘Security Groups’ (under the NETWORK & SECURITY section). Click there.

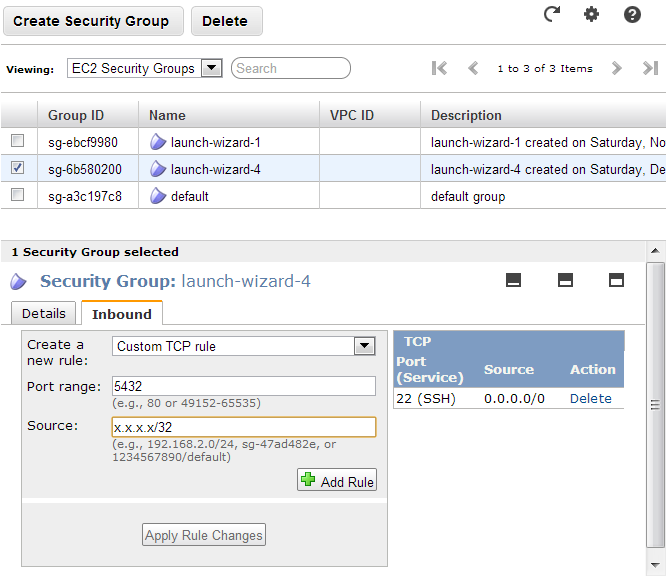

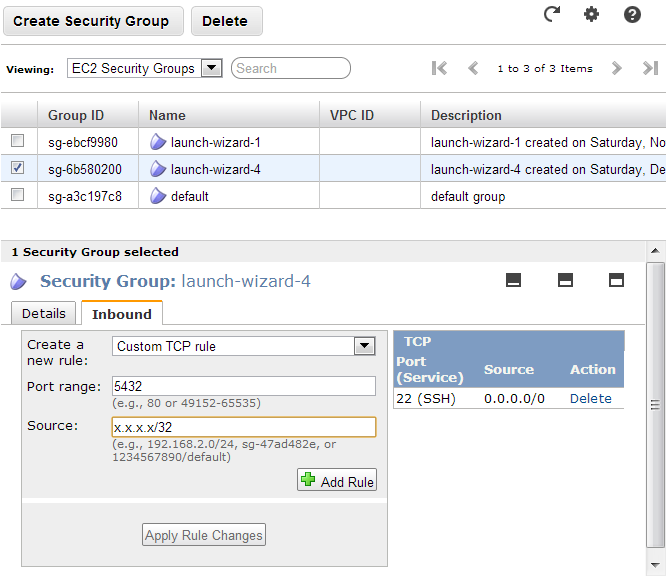

On the Security Group page, click on the Security Group Name we noted previously. Then, on the bottom click on the Inbound tab. Type 5432 into the Port Range. Then for the Source, enter in your IP address, followed by /32. (essentially, replace the x.x.x.x with your IP address. If you don’t know what your IP address is, you can literally google ‘What is my IP address?’ and Google will tell you.)

Don’t forget to press the Add Rule button, then the Apply Rule Changes button.

Step 5. This is the hardest step. You’re going to need to alter some files on the server. Let’s return to the command line. (You may need to Connect again on the Instances page as you did previously).

You first need to navigate to the directory with the configuration files. Type in:

cd /etc/postgresql/9.1/main

then type in:

ls

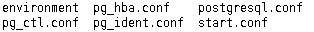

The command ls will list the files in your current directory. If all went well, you should see the following:

Next up, we’re going to be editing the pg_hba.conf file using a program called Vim. The command to edit this file with Vim is:

sudo vi pg_hba.conf

This will open up the file for editing. Now press the ‘i’ key on your keyboard. This toggles Insert mode, and will allow you to make edits.

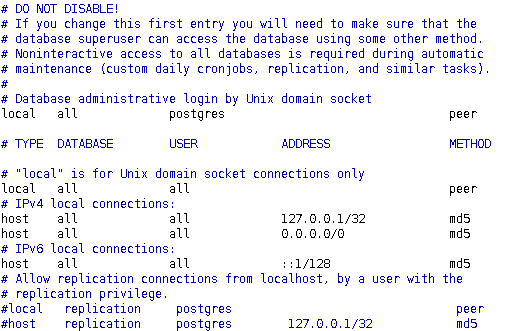

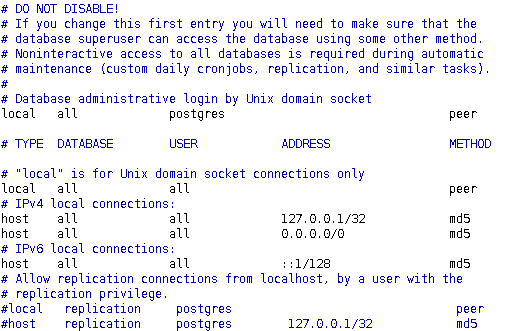

Scroll down (with the arrow keys) and edit the file so that it looks like the following:

Essentially, what you’re doing is finding the line that reads:

host all all 127.0.0.1/32 md5

And adding the following line below it:

host all all 0.0.0.0/0 md5

Now to save and quit, Press ESCAPE, then type:

:wq

This should take you back to the familiar command prompt.

We still have one more file to edit, postgresql.conf. Type:

sudo vi postgresql.conf

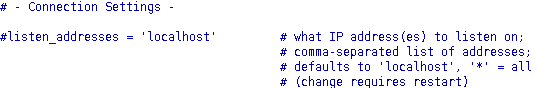

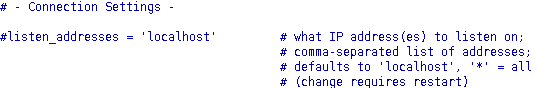

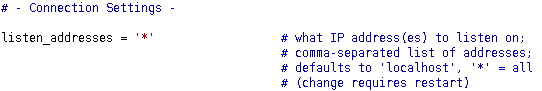

Scroll down to the line that looks like this:

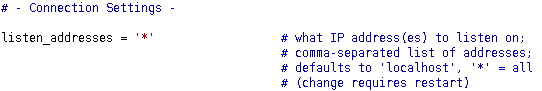

And edit it (remember to press the ‘i’ key to enter Insert mode) to look like this:

Now to save and quit, Press ESCAPE, then type:

:wq

Breath a sigh of relief, you’re almost done. The last step is to restart PostgreSQL to incorporate these changes. Type:

sudo /etc/init.d/postgresql restart

Then you’re done with the command line. Lastly, type:

exit

And close the window.

Step 6. Let’s connect to Microsoft Access!

Not so fast! You need to install a driver first. Go to this page to download the PostgreSQL ODBC driver that will allow you to connect a PostgreSQL database to Microsoft Access. My version of MS Access is 32-bit, so I chose the 32-bit version of the latest driver psqlodbc_09_02_0100.zip . (In retrospect, perhaps I should have chosen the 9.1 version of the driver, since that is the version of PostgreSQL on the Linux machine. No matter, there seems to be some backwards compatibility because it works fine.)

Download and install that driver. Your mileage may vary slightly from these directions if you have a 64 bit version of MS Access.

Step 7. NOW Let’s connect to Microsoft Access!

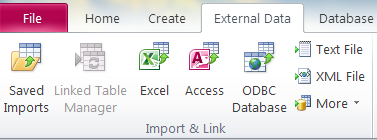

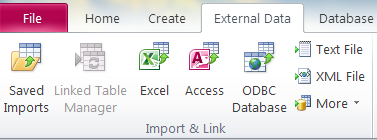

Open MS Access and create a New Blank Database. Depending on your version of MS Access, these instructions may vary slightly. For me (using the Ribbon in Access 2010), I click on the ‘External Data’ section and then click on the ‘ODBC Database’ Icon.

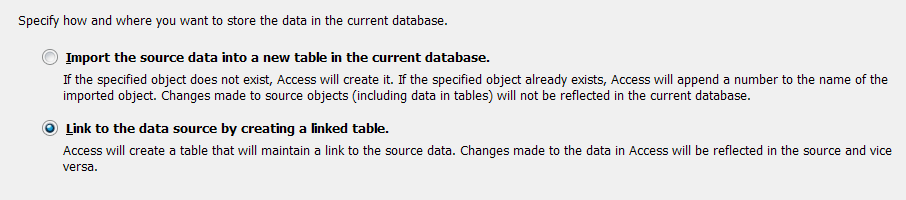

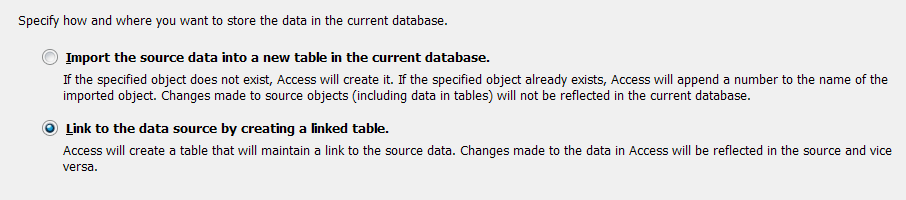

Then you’ll select ‘Link to the data source by creating a linked table’. (You can choose to Import the data instead – your database will just fill up faster because you will actually be downloading the data from Amazon, rather than just referencing it).

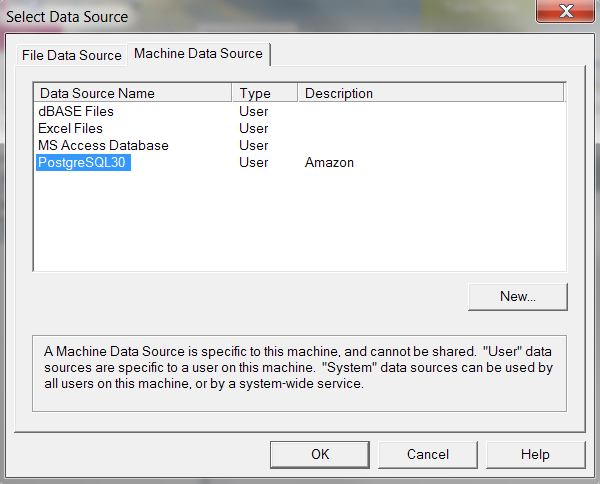

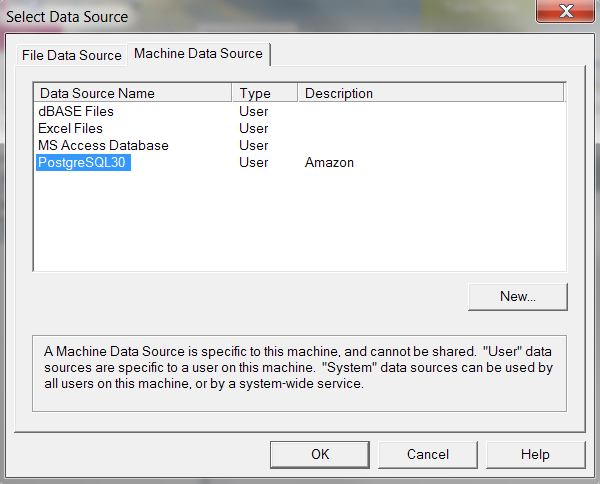

Then you’ll click the Machine Data Source tab, and press the ‘New…’ button.

You can choose whichever option that you want for this dialog, depending on your User Account preferences. Press Next.

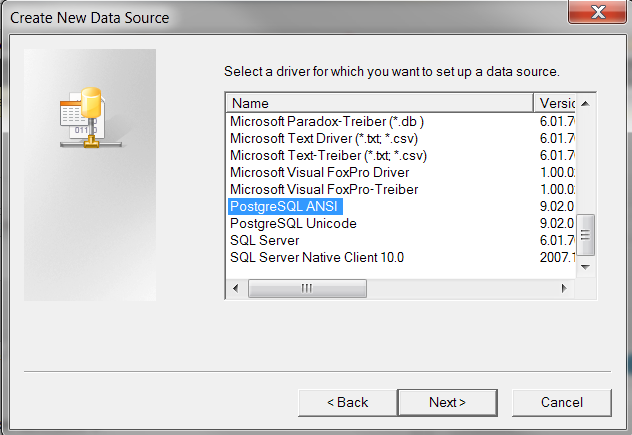

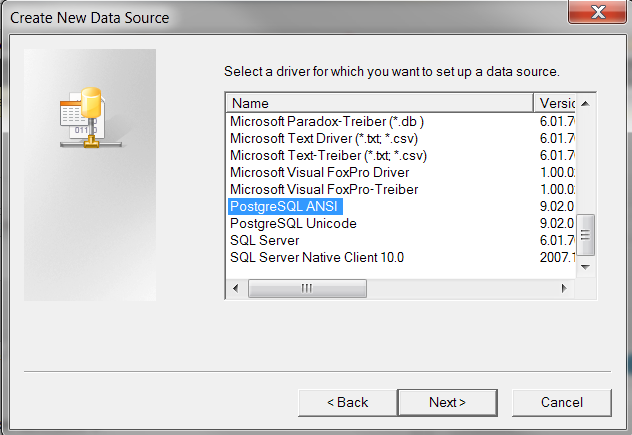

On the ‘Create New Data Source’ dialog, scroll down and select PostgreSQL ANSI, and then press Next.

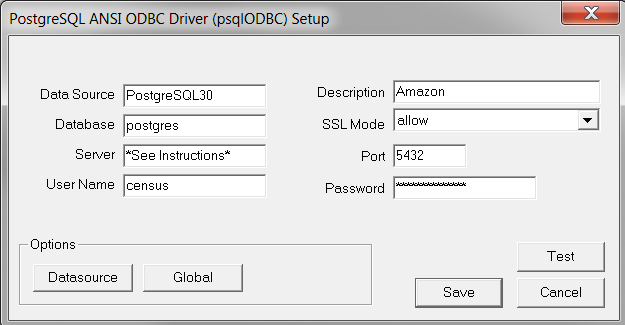

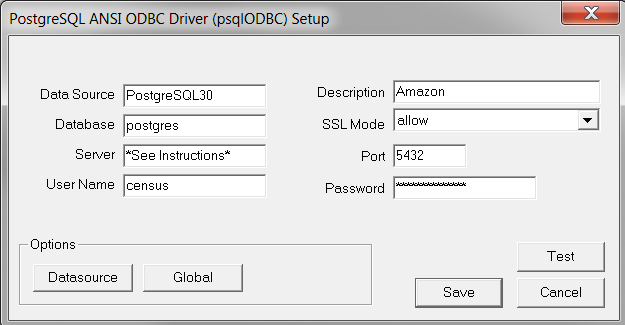

Click ‘Finish’. Here’s the next dialog:

Fill out the dialog box exactly how it shows in the picture above, with two important notes;

1. The password is censuspassword.

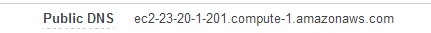

2. For the Server information, you’ll need to return to your EC2 instance webpage, click your instance, and look in the top right of the information panel for your Public DNS information.

3. Click the Datasource button and Uncheck ‘Use Declare/Fetch’. Press Apply then OK.

Press Test. Hopefully you’ll get a popup dialog that says ‘Connection successful’.

Press Okay, then press Save.

Now, back at the ‘Select Data Source’ dialog, highlight ‘PostgreSQL30′ and press ‘OK’.

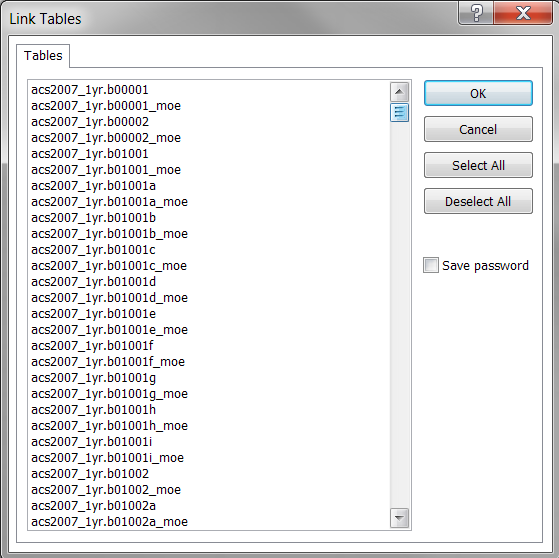

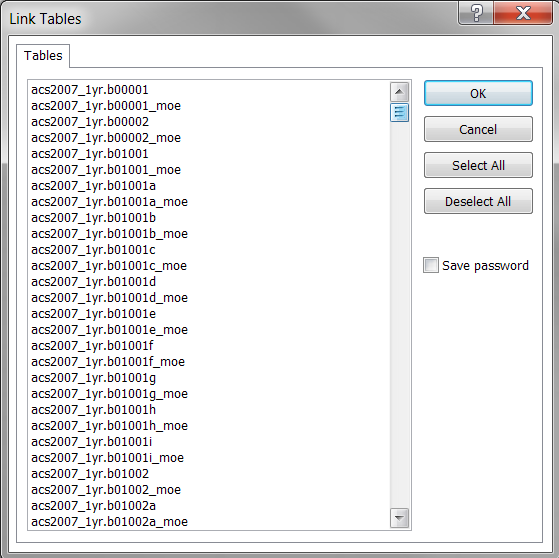

Voila! Hundreds of ACS Tables! You can probably take it from here. Don’t forget, if you’re curious about which data tables are in which files, you can look that up easily in the documentation files on the Census FTP site.

Final Notes: To avoid being charged extra, make sure to terminate your instance when you’re done with it. You can choose to stop your instance instead (so that you won’t have to run through this process again), but stopping an instance – while saving some money – will not stop you from being charged entirely. Amazon will still be charging you for database storage (the EBS volume).

Stopping and starting your instance will very likely change your public DNS. Thus be prepared to change your MS Access PostgreSQL login information each time you do this.

Advanced Users: Instructions on connecting to pgAdmin and on using PHP to connect to the database are forthcoming. Much of the setup instructions (minus the MS Access pieces) are the same.

The dreaded command line dialog opens up. So where are we? Right now, we’re talking directly to our cloud computer, which is a Linux machine. (Thus we’ll have to speak in a language quite different than what we’re used to).

The dreaded command line dialog opens up. So where are we? Right now, we’re talking directly to our cloud computer, which is a Linux machine. (Thus we’ll have to speak in a language quite different than what we’re used to).